Media Containers

Most of us have come across media containers. Multimedia file extensions such as mp3, mp4, and mkv are widely recognized, especially by millennials, who grew up exchanging and sharing MP3 files.

In brief, a media container combines several types of data: the main video stream, one or more audio tracks (e.g., for multilingual material), and one or more subtitle tracks. A container also stores metadata about the streams. This includes a description of the video and audio codecs used and the positions of keyframes in the file along with their timestamps. In short, it holds everything needed to play the movie, seek within it, and resume playback.

There are many types of media containers, such as mp4, mov, and mkv. Some, such as 3gp, are no longer commonly used, while others, like fMP4, build on earlier solutions or standards and are still being developed today.

In this article, I will describe what the process of creating a multimedia file looks like, what types of containers there are, their characteristics, and their contemporary applications.

Creating a media container

- Encoding and Decoding

The first step in creating a multimedia file is encoding, which converts the source image and sound into a compact binary format. How this data is stored and processed is defined by the codecs: video codecs (e.g., the popular H.264) and audio codecs (e.g., AAC). Video codecs define parameters such as image resolution, bitrate, and frame rate; audio codecs define aspects like sampling frequency and the number of channels. The output of encoding is limited in scope: a video bitstream contains only image data, and sound is encoded completely separately. Each bitstream also carries the information required to decode it, i.e., to play it back.
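To get a feel for why encoding is needed at all, the following back-of-the-envelope sketch compares the bitrate of uncompressed video with an encoded stream. The 1080p/30 fps source, the 8-bit 4:2:0 pixel layout, and the 5 Mbit/s target are illustrative assumptions, not values from this article.

```python
def raw_video_bitrate(width, height, fps, bytes_per_pixel=1.5):
    """Bits per second of uncompressed video (1.5 bytes/pixel = 8-bit 4:2:0)."""
    return width * height * bytes_per_pixel * fps * 8


raw = raw_video_bitrate(1920, 1080, 30)
target = 5_000_000  # assumed encoder target bitrate in bits per second

print(f"raw: {raw / 1e6:.1f} Mbit/s, compression ratio: {raw / target:.0f}:1")
# prints "raw: 746.5 Mbit/s, compression ratio: 149:1"
```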

In our article titled “How video encoding works,” we describe the video encoding process in detail, so if you would like to learn more about it, we invite you to read that material.

- Multiplexing and Demultiplexing

At this stage, the audio and video tracks are two separate pieces, and our task is to combine them. This process is called multiplexing, and the way it is carried out is defined by the container itself. Typically, the video and audio data are interleaved in blocks that are correlated in time. This means the player does not have to wait until the entire multimedia file is downloaded before it can play both tracks together. Metadata is also created during this process, most often a list of keyframes with their locations in the stream and their timestamps. Thanks to this, when we want to play a film from, for example, the 5th minute, we can find the right place in the video and audio bitstreams and start playback from there or from the closest keyframe. The opposite of multiplexing is demultiplexing, which, as the name suggests, allows us to “extract” a specific multimedia track.
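The idea can be sketched in a few lines of Python. This is a simplified model rather than the algorithm of any real container: the `Packet` class, the half-second video frames, and the keyframe interval are all made up for illustration.

```python
import bisect
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    track: str             # "video" or "audio"
    pts: float             # presentation timestamp in seconds
    keyframe: bool = False


def mux(*tracks):
    """Interleave tracks (each already sorted by time) into one timeline."""
    return list(heapq.merge(*tracks, key=lambda p: p.pts))


def demux(packets, track):
    """Extract a single track from the interleaved packets."""
    return [p for p in packets if p.track == track]


def build_keyframe_index(packets):
    """Return parallel lists: keyframe timestamps and their positions."""
    entries = [(p.pts, i) for i, p in enumerate(packets)
               if p.track == "video" and p.keyframe]
    return [t for t, _ in entries], [i for _, i in entries]


def seek(index, target):
    """Position of the last keyframe at or before `target` seconds."""
    times, positions = index
    slot = bisect.bisect_right(times, target) - 1
    return positions[max(slot, 0)]


# Hypothetical input: a video frame every 0.5 s (every 4th is a keyframe)
# and an audio packet every 0.25 s.
video = [Packet("video", n * 0.5, keyframe=(n % 4 == 0)) for n in range(12)]
audio = [Packet("audio", n * 0.25) for n in range(24)]

muxed = mux(video, audio)
index = build_keyframe_index(muxed)
start = seek(index, 3.2)
print(muxed[start])  # the keyframe at 2.0 s, the closest one before 3.2 s
```

Seeking lands on a keyframe rather than on the exact requested time because only a keyframe can be decoded without the frames before it.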

Metadata is most often located at the beginning of the file so that it becomes available as early as possible while the file is being downloaded. If you have ever had to wait for a whole movie to download before it could be played, the usual reason for this “ailment” is that the metadata sits at the end of the file.
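For MP4-family files, where the metadata lives in a `moov` box and the media data in an `mdat` box (both described later in this article), the check can be sketched as below. The helper names and the synthetic test bytes are assumptions for illustration; a real file would be read from disk, ideally without loading all of it into memory.

```python
import struct


def top_level_boxes(data):
    """Yield (type, offset, size) for each top-level box in the buffer."""
    offset = 0
    while offset + 8 <= len(data):
        size, raw_type = struct.unpack_from(">I4s", data, offset)
        if size == 1:      # a 64-bit size follows the type field
            size = struct.unpack_from(">Q", data, offset + 8)[0]
        elif size == 0:    # the box extends to the end of the file
            size = len(data) - offset
        if size < 8:
            raise ValueError(f"corrupt box header at offset {offset}")
        yield raw_type.decode("latin-1"), offset, size
        offset += size


def metadata_first(data):
    """True if the moov box appears before the mdat box."""
    order = [box_type for box_type, _, _ in top_level_boxes(data)]
    return order.index("moov") < order.index("mdat")


def make_box(box_type, payload=b""):
    """Build a minimal box: 32-bit size, 4-byte type, payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic examples: the payloads are placeholders, not valid media.
ftyp = make_box(b"ftyp", b"isom")
moov = make_box(b"moov", b"\x00" * 16)
mdat = make_box(b"mdat", b"\x00" * 64)

print(metadata_first(ftyp + moov + mdat))  # metadata at the start
print(metadata_first(ftyp + mdat + moov))  # metadata at the end
```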

When a multimedia file is created, encoding and multiplexing usually happen simultaneously or one right after the other.

- Transmuxing and Transcoding

Transmuxing is also worth mentioning at this stage. It changes the container from one type (e.g., mp4) to another (webm, mkv). It may, but does not have to, be accompanied by transcoding the audio/video to other codecs. We usually deal with transmuxing and transcoding when preparing a version of a particular film or stream for another type of device that can play different media.
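The difference is easy to see in practice. The sketch below assumes the FFmpeg command-line tool, which this article does not otherwise prescribe; the file names and the choice of VP9 and Opus as target codecs are placeholders.

```python
def transmux_command(src, dst):
    """Change only the container: all streams are copied without re-encoding."""
    return ["ffmpeg", "-i", src, "-c", "copy", dst]


def transcode_command(src, dst, video_codec="libvpx-vp9", audio_codec="libopus"):
    """Re-encode video and audio while writing the new container."""
    return ["ffmpeg", "-i", src, "-c:v", video_codec, "-c:a", audio_codec, dst]


print(" ".join(transmux_command("input.mp4", "output.mkv")))
print(" ".join(transcode_command("input.mp4", "output.webm")))
```

Either list can be passed to `subprocess.run(..., check=True)` on a machine with FFmpeg installed. Copying streams only works when the target container accepts the source codecs, which is why moving from mp4 to webm usually involves transcoding as well.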

Contemporary Media Containers

- MP4

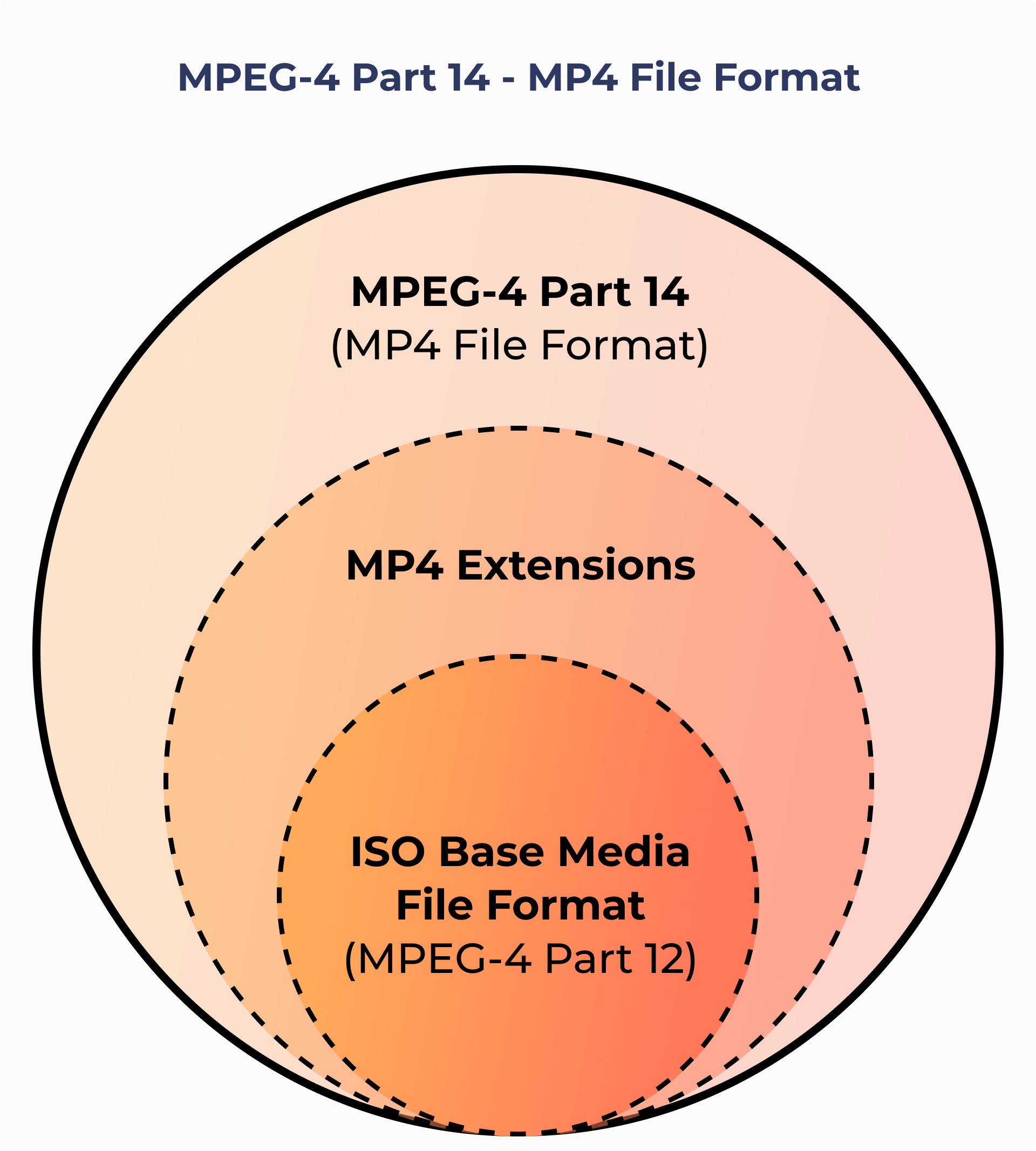

MPEG-4 Part 14, commonly known as MP4, is a widely utilized container format typically signified by the .mp4 file extension. It plays a crucial role in Dynamic Adaptive Streaming over HTTP (DASH) and is compatible with Apple’s HLS streaming protocols.

The structure of MP4 is derived from the ISO Base Media File Format (MPEG-4 Part 12), which is itself based on the QuickTime File Format.

The acronym MPEG stands for the Moving Picture Experts Group, a joint working group of the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). The group was established to develop standards for compressing and transmitting audio and video data. Within MPEG-4, there is a focus on the encoding of audio-visual objects. MP4 is compatible with a variety of codecs, with H.264 and HEVC being the predominant video codecs. For audio, Advanced Audio Coding (AAC) is most frequently used; it was designed as the successor to the well-known MP3 format.

ISO Base Media File Format

The ISO Base Media File Format (ISOBMFF), also recognized as MPEG-4 Part 12, serves as the foundational architecture for the MP4 container format. This standard is specifically designed to handle time-based multimedia, primarily audio and video content, which is typically delivered in a continuous stream. Its structure is intended to provide flexibility, ease of extension, and support for the interchange, management, editing, and presentation of multimedia data.

Central to the ISOBMFF are structures known as boxes or atoms. The design of these boxes utilizes an object-oriented approach, where each box is defined through classes. These boxes inherit properties from a generic base class named “Box,” allowing for specialization and the addition of new properties to meet specific functional requirements.

An example of such a box is the FileTypeBox, which is typically positioned at the beginning of the file. Its role is to specify the file’s purpose and how it should be utilized.

Furthermore, boxes can contain sub-boxes, creating a hierarchical tree structure. For instance, a MovieBox (moov), which describes the file’s content, may contain multiple TrackBoxes (trak). Each TrackBox corresponds to a single media stream, such as one for video and another for audio within the same file. The encoded media data itself is stored in a Media Data Box (mdat). Each track references its own portion of that data, which allows the media content to be decoded and rendered correctly. This structured approach keeps complex multimedia files organized and makes their contents easy to locate and access.
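The box hierarchy can be walked with a short recursive parser. The following is a minimal sketch, assuming the basic box header layout (32-bit big-endian size followed by a four-character type); the set of container boxes is deliberately incomplete, and the sample bytes are synthetic placeholders rather than a playable file.

```python
import struct

# Boxes whose payload is just a sequence of child boxes (partial list).
CONTAINER_BOXES = {"moov", "trak", "mdia", "minf", "stbl", "mvex", "moof", "traf"}


def parse_boxes(data, start=0, end=None):
    """Return a nested list of (type, size, children) tuples."""
    end = len(data) if end is None else end
    boxes = []
    offset = start
    while offset + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:      # a 64-bit size follows the type field
            size = struct.unpack_from(">Q", data, offset + 8)[0]
            header = 16
        elif size == 0:    # the box extends to the end of the enclosing range
            size = end - offset
        if size < header or offset + size > end:
            raise ValueError(f"corrupt box at offset {offset}")
        box_type = raw_type.decode("latin-1")
        children = []
        if box_type in CONTAINER_BOXES:
            children = parse_boxes(data, offset + header, offset + size)
        boxes.append((box_type, size, children))
        offset += size
    return boxes


def format_tree(boxes, depth=0):
    """Render the box tree as indented lines."""
    lines = []
    for box_type, size, children in boxes:
        lines.append(f"{'  ' * depth}{box_type} ({size} bytes)")
        lines.extend(format_tree(children, depth + 1))
    return lines


def make_box(box_type, payload=b""):
    """Build a minimal box for the demonstration below."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic file: one movie box with two tracks, followed by media data.
track = make_box(b"trak", make_box(b"tkhd"))
sample = (make_box(b"ftyp", b"isom\x00\x00\x00\x00")
          + make_box(b"moov", track + track)
          + make_box(b"mdat", b"\x00" * 32))

tree = parse_boxes(sample)
print("\n".join(format_tree(tree)))
```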

Fragmented MP4

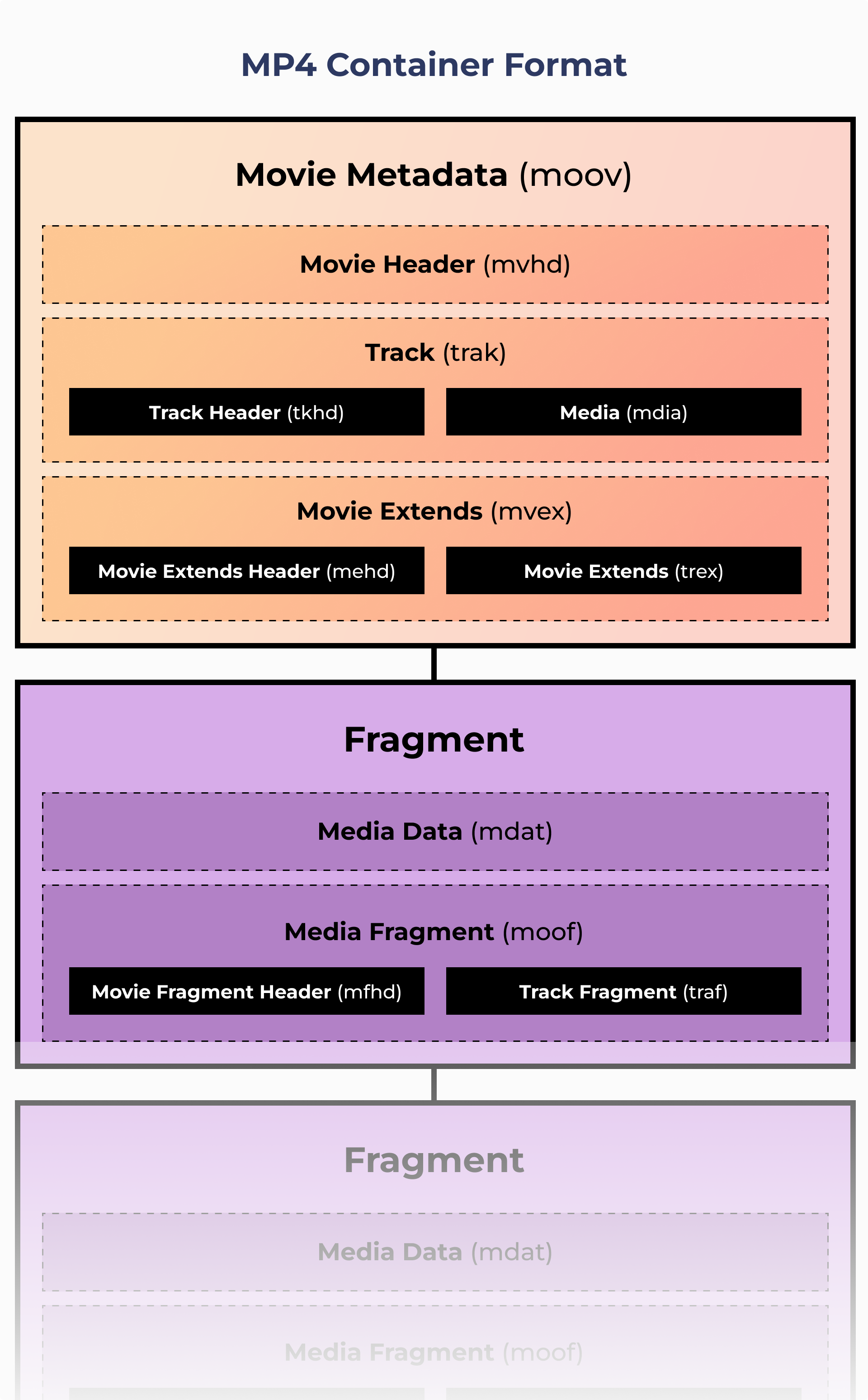

MP4 also facilitates the segmentation of a film into various fragments. This feature is particularly beneficial for using technologies like DASH or HLS, as it allows the playback software to download only the fragments that the viewer intends to watch.

In a fragmented MP4 setup, the typical MovieBox is present, accompanied by TrackBoxes that indicate the availability of different media streams. A Movie Extends Box (mvex) indicates that the movie continues across these fragments.

A further benefit of this structure is the ability to store fragments in separate files. Each fragment includes a Movie Fragment Box (moof), closely resembling a Movie Box (moov), which details the media streams specific to that segment. For example, it carries the timestamp data for the 10 seconds of video held within that particular fragment.

Moreover, each fragment possesses its own Media Data (mdat) box, which stores the actual media data.
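The layout and the benefit for players can be modelled as follows. This is a toy model under stated assumptions: the `Fragment` class, the 35-second film, and the helper names are invented for illustration, and only the 10-second fragment length echoes the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    sequence: int     # running fragment number
    start: float      # start time in seconds, as carried by the moof box
    duration: float   # length of the media held in the mdat box


def build_fragments(total_duration, fragment_duration=10.0):
    """Cut a timeline into consecutive fragments."""
    fragments = []
    start = 0.0
    while start < total_duration:
        duration = min(fragment_duration, total_duration - start)
        fragments.append(Fragment(len(fragments) + 1, start, duration))
        start += duration
    return fragments


def file_layout(fragments):
    """Top-level boxes: ftyp, moov (containing mvex), then moof+mdat pairs."""
    layout = ["ftyp", "moov"]
    for _ in fragments:
        layout += ["moof", "mdat"]
    return layout


def fragments_for(fragments, begin, end):
    """Fragments a player must fetch to show the range [begin, end)."""
    return [f for f in fragments
            if f.start < end and f.start + f.duration > begin]


fragments = build_fragments(35.0)
needed = fragments_for(fragments, 12.0, 25.0)
print(file_layout(fragments))
print([f.sequence for f in needed])  # fragments 2 and 3
```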

- MPEG-CMAF

Distributing content across various platforms can be a complex task when each platform may support only specific container formats.

To disseminate a particular piece of content, it might be necessary to create and provide versions of the content in multiple container formats, such as MPEG-TS and fMP4. This requirement leads to increased costs in infrastructure for content production and also raises storage expenses for maintaining several versions of the same material. Additionally, it can reduce the efficiency of CDN caching.

MPEG-CMAF seeks to address these issues, not by introducing a new container format, but by adopting a single, pre-existing container file format for OTT media delivery.

CMAF is closely tied to fMP4, facilitating a straightforward transition from fMP4 to CMAF. Additionally, with Apple’s involvement in CMAF, the need to multiplex content in MPEG-TS for Apple devices may soon become obsolete, allowing CMAF to be universally applicable.

MPEG-CMAF also enhances the compatibility of DRM (Digital Rights Management) systems through the use of MPEG-CENC (Common Encryption). It theoretically enables content to be encrypted once and be compatible with various leading DRM systems.

In practice, however, no single encryption scheme is supported everywhere yet, and competing DRM systems, such as Widevine and PlayReady, are still not interoperable with each other. Nevertheless, the DRM industry is gradually converging on a unified encryption standard, the Common Encryption format.

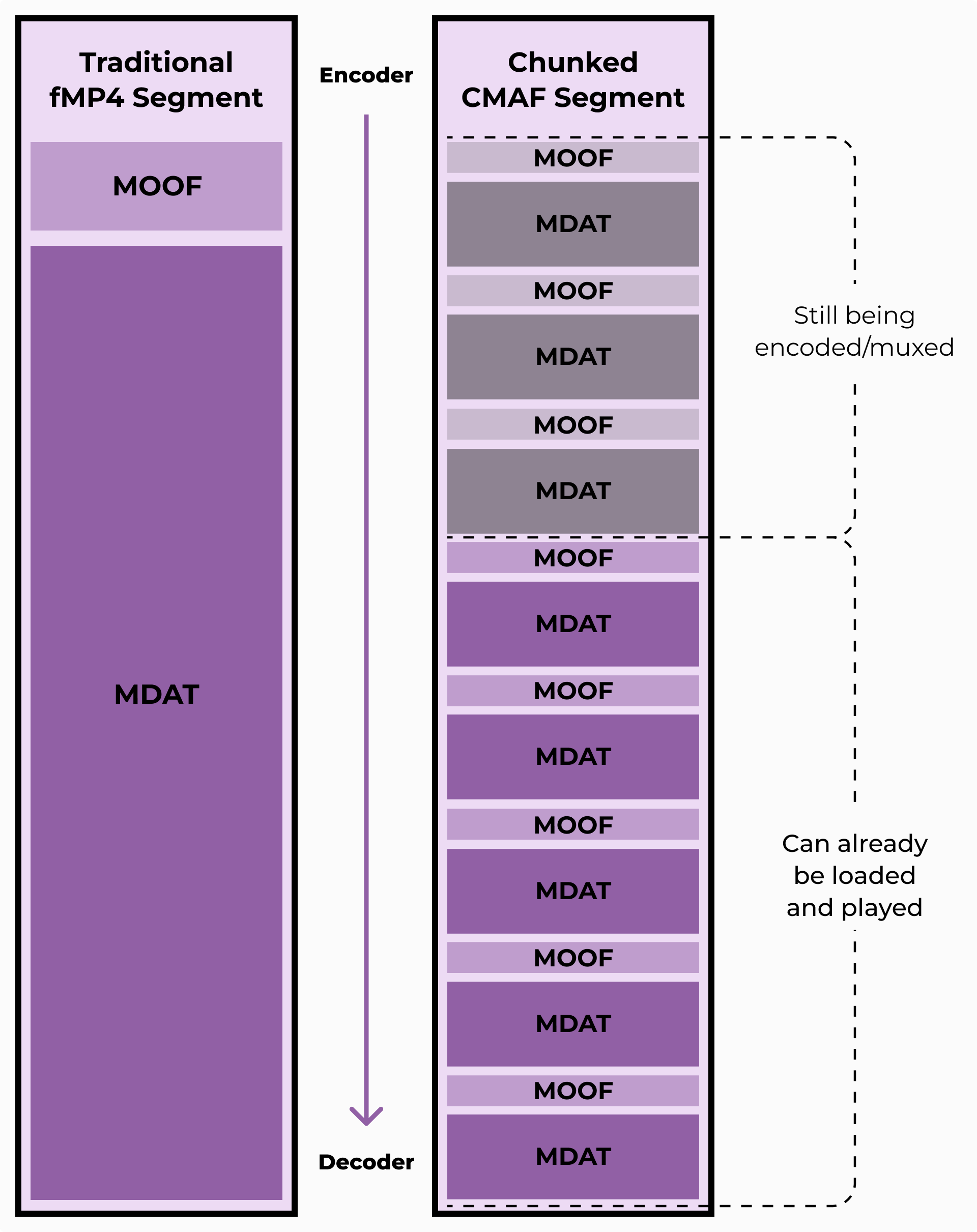

Chunked CMAF

A notable feature of MPEG-CMAF is its capability to encode media in CMAF chunks. This chunked encoding, paired with the delivery of media files using HTTP chunked transfer encoding, facilitates reduced latencies in live streaming scenarios compared to previous methods.
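A rough way to see where the gain comes from is to ask how long a freshly captured frame has to wait before the unit that contains it is complete and can be sent. The sketch below is a deliberately simplified model that ignores encoding time, network transfer, and player buffering; the 6-second segment and 0.5-second chunk durations are assumptions.

```python
import math


def earliest_publish_time(media_time, unit_duration):
    """Time at which the unit containing `media_time` is complete."""
    units_completed = math.floor(media_time / unit_duration) + 1
    return units_completed * unit_duration


def packaging_delay(media_time, unit_duration):
    """How long a frame waits before its unit can be delivered."""
    return earliest_publish_time(media_time, unit_duration) - media_time


frame_time = 7.2  # seconds since the start of the live stream

print(f"whole segments: {packaging_delay(frame_time, 6.0):.1f} s")
print(f"CMAF chunks:    {packaging_delay(frame_time, 0.5):.1f} s")
```

In this model the worst-case wait equals the duration of the unit being published, which is why publishing short chunks instead of whole segments lowers latency.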

- MPEG-TS

The MPEG Transport Stream was established under MPEG-2 Part 1, tailored specifically for Digital Video Broadcasting (DVB) applications.

Unlike the MPEG Program Stream, which is designed for media storage and utilized in applications such as DVDs, the MPEG Transport Stream is more focused on efficient transport.

The structure of an MPEG Transport Stream is composed of small, discrete packets, which are intended to enhance resilience and minimize the effects of corruption or loss.
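The packet structure is simple enough to parse by hand. In the sketch below, the fixed 188-byte packet size, the 0x47 sync byte, and the header bit layout come from the MPEG-2 Systems specification rather than from this article; the function names and the two sample packets are made up for illustration.

```python
TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def parse_ts_header(packet):
    """Decode the 4-byte header of a single transport stream packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not a valid transport stream packet")
    return {
        "transport_error": bool(packet[1] & 0x80),
        "payload_unit_start": bool(packet[1] & 0x40),
        "pid": ((packet[1] & 0x1F) << 8) | packet[2],   # 13-bit stream id
        "continuity_counter": packet[3] & 0x0F,         # 4-bit, per PID
    }


def iter_packets(stream):
    """Split a byte string into consecutive 188-byte packets."""
    for offset in range(0, len(stream) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        yield stream[offset:offset + TS_PACKET_SIZE]


# Two synthetic packets with empty payloads: PID 0x100 and PID 0.
stream = (bytes([0x47, 0x41, 0x00, 0x17]) + bytes(184)
          + bytes([0x47, 0x00, 0x00, 0x10]) + bytes(184))

headers = [parse_ts_header(p) for p in iter_packets(stream)]
for header in headers:
    print(header)
```

Because every packet starts with the same sync byte and carries a per-stream continuity counter, a receiver can resynchronize after corruption and notice when packets have gone missing.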

Additionally, the format is commonly combined with Forward Error Correction (FEC) techniques, which help correct transmission errors at the receiving end.

This format is particularly suited for use over lossy transport channels, where data loss may occur.

- Matroska

Matroska is an open and free container format, rooted in the Extensible Binary Meta Language (EBML), a binary counterpart of XML.

This design allows the format to be highly extensible, supporting virtually any codec.
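Every EBML element consists of an ID, a size, and a payload, where the ID and size are stored as variable-length integers whose byte count is signalled by the leading zero bits of the first byte. The following is a minimal sketch of reading such elements; the function names are assumptions, and special cases such as unknown-size elements are left out.

```python
def read_vint(data, offset=0, keep_marker=False):
    """Read an EBML variable-length integer; return (value, length)."""
    first = data[offset]
    if first == 0:
        raise ValueError("integers longer than 8 bytes are not handled here")
    length = 8 - first.bit_length() + 1      # leading zero bits + 1
    if keep_marker:                          # element IDs keep the marker bit
        value = first
    else:                                    # sizes drop the marker bit
        value = first & ((1 << (8 - length)) - 1)
    for byte in data[offset + 1:offset + length]:
        value = (value << 8) | byte
    return value, length


def read_element(data, offset=0):
    """Return (element id, payload bytes, offset of the next element)."""
    element_id, id_length = read_vint(data, offset, keep_marker=True)
    size, size_length = read_vint(data, offset + id_length)
    start = offset + id_length + size_length
    return element_id, data[start:start + size], start + size


# A tiny EBML header (ID 0x1A45DFA3) holding one DocType element (ID 0x4282).
doc_type = bytes.fromhex("4282") + b"\x84" + b"webm"
header = bytes.fromhex("1A45DFA3") + b"\x87" + doc_type

header_id, header_payload, _ = read_element(header)
inner_id, inner_payload, _ = read_element(header_payload)
print(hex(header_id), hex(inner_id), inner_payload)
```

A parser that meets an ID it does not know can use the size field to skip the element, which is what makes the format easy to extend.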

WebM derives from the Matroska container format and was primarily developed by Google. This initiative was intended to provide a free and open alternative to existing formats like MP4 and MPEG-TS for web usage.

The format was specifically engineered to accommodate Google’s open codecs, such as VP8 and VP9 for video, along with Opus and Vorbis for audio.

Additionally, WebM can be utilized with DASH to facilitate streaming of VP9 and Opus content across the internet.

Containers and Codecs

Different containers can support various sets of codecs. Below are two tables that illustrate the compatibility of the listed containers with respect to the most popular video and audio codecs.

| H.264 | H.265 | VP8 | VP9 | AV1 | |

| MP4 | ✓ | ✓ | ✓ | ||

| CMAF | ✓ | ✓ | ✓ | ||

| TS | ✓ | ||||

| WebM | ✓ | ✓ | ✓ |

| MP3 | AAC | OPUS | |

| MP4 | ✓ | ✓ | ✓ |

| CMAF | ✓ | ✓ | ✓ |

| TS | ✓ | ||

| Matroska | ✓ |

However, it should be remembered that it is ultimately the player (including the browser) that decides which codecs it can play and which it cannot.

Summary

In summary, media containers are essential for the organization, management, and playback of multimedia files, accommodating various streams of audio, video, and subtitles along with critical metadata. This article explores the intricacies of media containers like MP4, MKV, and emerging standards like CMAF, detailing their creation processes—encoding, multiplexing, and transmuxing—and their specific roles in contemporary digital media environments. The discussion underscores the significance of choosing appropriate container formats to enhance compatibility, efficiency, and performance in media delivery across diverse platforms and devices.

Storm Streaming Server & Cloud media container support

Both Storm Streaming Server and the Storm Streaming Cloud based on it are equipped with proprietary software that enables the creation and reading of various types of containers, including MP4 (especially fMP4) and WebM. This mechanism is used in video transcoding, but also in recording streams to video files, as well as supporting protocols like HLS and LL-HLS.