HTTP Live Streaming (HLS) Protocol

The history of the HLS protocol is directly tied to Apple’s entry into the mobile phone market in 2007. One of the defining features of the iPhone’s new operating system (later renamed iOS) and its mobile Safari browser was the lack of support for the Adobe Flash Player plugin. Steve Jobs’s reasons for refusing to support the mobile version of Flash were not purely technical, but we won’t be dealing with them today. Adobe Flash Player never made it to devices bearing the Apple logo, and the HTML of that time was significantly inferior to the plugin (HTML5 was only finalized as a W3C standard in 2014). Apple therefore had to somehow “patch” the gap in capabilities that Flash offered, especially in video streaming.

So how do you quickly create a streaming protocol? Here, the engineers in Cupertino showed real ingenuity. Their idea: why not cut the stream into “small pieces” and deliver them one after another? The browser would then only need to stitch these pieces together, creating the impression of a single continuous video. The technology was named HTTP Live Streaming (HLS) and debuted on Apple devices in 2009.

Usage and main characteristics

Today, HLS (HTTP Live Streaming) is one of the most popular streaming protocols on the Internet. Technically, it runs over HTTP, which in turn runs over TCP/IP. As described above, the mechanism is quite simple. Playback typically begins by downloading an .m3u8 file, known as the “manifest” or “playlist”, which describes the stream: the segments to download next, alternative versions for different resolutions and bitrates, and so on. Each of these segments (also called “chunks”) is a separate video file, typically 6-10 seconds long, but the player plays them back in a way that makes the joined material appear to have no breaks.

Advantages

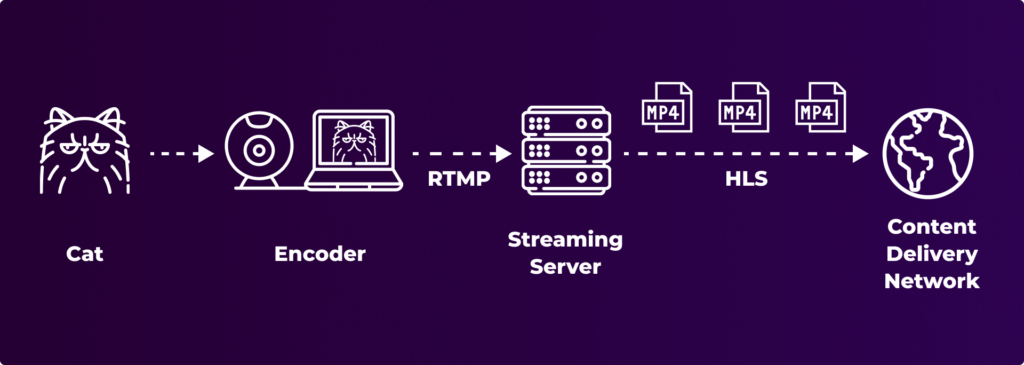

The main advantage of the HLS (HTTP Live Streaming) protocol is its universal support: with a dedicated web video player, streams can be played on virtually any device. Another advantage of HLS is ease of scaling. Each segment (“chunk”) is a separate file, so it can be replicated using a CDN (Content Delivery Network). Often, the role of a streaming server is precisely to produce these chunks and send them to an external CDN.

Other advantages of the HLS protocol include:

- Easy implementation of nDVR (rewinding live streams) and adaptive bitrate streaming (multiple qualities of the same stream), which improves the viewing experience because the player can switch to the quality the viewer’s connection can sustain.

- Because HLS runs over HTTP(S), it avoids most firewall problems: firewalls often block other kinds of traffic but generally allow HTTP(S).

- Support for DRM (Digital Rights Management): segments can be encrypted to protect content against unauthorized use.

- On mobile devices with native HLS support, playback is very energy-efficient, which is crucial for battery-powered devices.

- In case of an error or playback failure, it is enough to simply re-download the missing stream fragment, which can quickly resolve viewing interruptions without extensive technical intervention.
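HLS itself only lists the available qualities; deciding when to switch between them is left to the player. The sketch below shows one simple throughput-based rule, assuming the player already has a bandwidth estimate. The `Variant` type, the `pick_variant` function, the 0.8 safety factor, and the playlist names are hypothetical, and real players such as hls.js use more elaborate logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    bandwidth: int  # bits per second, as in the BANDWIDTH attribute
    uri: str        # media playlist for this quality


def pick_variant(variants: list[Variant], measured_bps: float, safety: float = 0.8) -> Variant:
    """Pick the highest-bandwidth variant that fits within a fraction of the
    measured throughput; fall back to the lowest variant if none fits.

    The safety factor is an illustrative assumption, not part of HLS.
    """
    if not variants:
        raise ValueError("no variants to choose from")
    budget = measured_bps * safety
    fitting = [v for v in variants if v.bandwidth <= budget]
    if fitting:
        return max(fitting, key=lambda v: v.bandwidth)
    # Nothing fits: play the lowest quality rather than stall.
    return min(variants, key=lambda v: v.bandwidth)
```

With variants at 0.8, 1.4, and 3.5 Mbps and a measured throughput of 2 Mbps, the budget is 1.6 Mbps, so `pick_variant` returns the 1.4 Mbps variant. The safety margin leaves headroom for throughput fluctuations between measurements.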

Disadvantages

Just as HLS has many advantages, the list of disadvantages is also extensive:

- Latency: HLS suffers from large delays of about 6-10 seconds (closer to 3-4 seconds with LL-HLS). This makes it a poor fit for services where interaction between the broadcaster and viewers matters.

- Limited application: HLS is used almost exclusively to deliver streams to end viewers. It is rarely used to send streams to streaming servers (ingest).

- Lack of signaling layer: unlike RTMP, for example, HLS has no signaling layer. In practice there are only two states: either the stream exists, or it does not.

- Limited codec support: HLS supports a narrow range of codecs; in practice, most streams use H.264/H.265 video and AAC audio.

- Proprietary nature: HLS is a protocol from Apple, which maintains full control over its development.

Supported codecs

The list of codecs supported by HLS is relatively small and also depends on the browser and device used.

Supported video codecs:

- AVC/H.264

- HEVC/H.265

- AV1 (only on some devices)

Supported audio codecs:

- AAC

- AC-3

- E-AC-3

- MP3
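The CODECS attribute of each variant in the manifest is how a player decides which versions it can decode on a given device. Below is a minimal lookup sketch covering only a few common codec strings; the `KNOWN_CODECS` table and the `describe_codecs` function are illustrative assumptions, not a complete registry.

```python
# Illustrative subset of CODECS identifiers; far from complete.
KNOWN_CODECS = {
    "avc1": "H.264/AVC",
    "avc3": "H.264/AVC",
    "hvc1": "H.265/HEVC",
    "hev1": "H.265/HEVC",
    "av01": "AV1",
    "mp4a.40.2": "AAC-LC",
    "mp4a.40.5": "HE-AAC",
    "ac-3": "AC-3",
    "ec-3": "E-AC-3",
}


def describe_codecs(codecs_attr: str) -> list[str]:
    """Map each comma-separated entry of a CODECS attribute to a readable name."""
    names = []
    for entry in codecs_attr.split(","):
        entry = entry.strip()
        # Try an exact match first (audio entries such as mp4a.40.2), then the
        # sample-entry prefix before the first dot (video entries such as
        # avc1.xxxxxx, whose suffix encodes profile and level).
        name = KNOWN_CODECS.get(entry) or KNOWN_CODECS.get(entry.split(".")[0], "unknown")
        names.append(name)
    return names
```

A player could drop every variant that contains an entry it cannot decode before applying its bitrate logic.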

Browser support

As previously mentioned, HLS is not always supported natively by browsers. Desktop browsers typically do not support it (Safari is the exception, but it is only available on macOS). Support can, however, be added in JavaScript using libraries such as hls.js. These libraries rely on the Media Source Extensions (MSE) API, which lets JavaScript feed downloaded media segments into a standard video element for playback.

Browsers that natively support the HLS protocol:

- Safari (from version 6.0)

- Chrome for Android (from version 124)

- Safari on iOS (from version 3.2)

- Samsung Internet (from version 4)

- Opera Mobile (from version 80)

- Android Browser (from version 124)

Browsers requiring additional libraries:

- Chrome (Desktop version for all operating systems)

- Edge (Desktop version)

- Firefox (Desktop version)

- Opera (Desktop version)

It’s important to note that additional players or libraries for HLS depend on the Media Source Extensions API. Fortunately, this API is available in all major desktop browsers.

Protocol mechanics

The mechanics of HLS build directly on HTTP: all data reaches the browser through a sequence of ordinary HTTP requests. HLS uses two types of files. The first are manifest files (*.m3u8), which describe the stream. The second are the media segments themselves, in MPEG-TS (.ts) or fragmented MP4 (.mp4) format.
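The request flow described above can be sketched as a short loop: fetch the media manifest, then fetch each listed segment in order. This sketch assumes a VOD playlist fetched once (a live player would reload the manifest periodically) and ignores encryption; `iter_segments` and its injectable `fetch` parameter are hypothetical names used for illustration.

```python
from typing import Callable, Iterator
from urllib.parse import urljoin
from urllib.request import urlopen


def http_get(url: str) -> bytes:
    """Plain HTTP GET: every HLS request is just this."""
    with urlopen(url) as resp:
        return resp.read()


def iter_segments(playlist_url: str, fetch: Callable[[str], bytes] = http_get) -> Iterator[bytes]:
    """Download a media playlist, then each segment it lists, in order."""
    text = fetch(playlist_url).decode("utf-8")
    for line in text.splitlines():
        line = line.strip()
        # Lines starting with '#' are tags or comments; any other non-empty
        # line is a segment URI, resolved relative to the playlist URL.
        if line and not line.startswith("#"):
            yield fetch(urljoin(playlist_url, line))
```

Passing a different `fetch` function (for example, one backed by a dictionary of canned responses) makes the loop easy to test without a network.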

A video stream can have multiple manifest files: a single primary playlist, historically called the ‘master’ playlist, and multiple media playlists. The primary manifest is the first file a player requests when playback begins. It lists every available version of the stream, using two lines per version, which typically look like this:

#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=3500000,RESOLUTION=1920x1080,CODECS="avc1.42e030,mp4a.40.2"

/hls/live/test.m3u8

The first line of this example describes one version of the stream:

- it requires a bandwidth of 3.5 Mbps (BANDWIDTH=3500000 bits per second),

- it has Full HD video resolution (1920×1080),

- it uses the codecs avc1.42e030 (H.264 video) and mp4a.40.2 (AAC-LC audio), so the player can pick a version it is able to decode.

The second line is the path to this specific version of the video. Notice that it points to another .m3u8 file, the media manifest: the primary manifest contains one such link for each version of the stream.
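The two-line entries of a primary manifest can be read with a small parser. One detail matters: attribute values such as CODECS are quoted and may contain commas, so the attribute list cannot simply be split on commas. A sketch follows; `parse_attributes` and `parse_primary_playlist` are hypothetical helper names, and only #EXT-X-STREAM-INF entries are handled.

```python
import re

# KEY=VALUE pairs, where VALUE is either a quoted string (which may contain
# commas, as CODECS does) or an unquoted token running up to the next comma.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(attr_list: str) -> dict[str, str]:
    """Parse an HLS attribute list into a dict, removing quotes from values."""
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_list)}


def parse_primary_playlist(text: str) -> list[dict[str, str]]:
    """Pair each #EXT-X-STREAM-INF tag with the URI on the following line."""
    variants = []
    pending = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#EXT-X-STREAM-INF:"):
            pending = parse_attributes(line.split(":", 1)[1])
        elif pending is not None and not line.startswith("#"):
            # The first non-tag line after the tag is the variant's playlist URI.
            pending["URI"] = line
            variants.append(pending)
            pending = None
    return variants
```

Given the two lines of the example entry, `parse_primary_playlist` returns one variant whose CODECS value is "avc1.42e030,mp4a.40.2" and whose URI is /hls/live/test.m3u8.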

A media manifest lists the segments of a single version of the stream (for a live stream, only the most recent ones). Media manifests generally have this structure:

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-ALLOW-CACHE:NO

#EXT-X-TARGETDURATION:4

#EXT-X-MEDIA-SEQUENCE:0

#EXT-X-PLAYLIST-TYPE:VOD

#EXT-X-KEY:METHOD=AES-128,URI="aes.key",IV=0x07B1D7401B75CA2937353EC4550FEE5D

#EXTINF:4.000000,

0000.ts

#EXTINF:4.000000,

0001.ts

#EXTINF:4.000000,

0002.ts

#EXTINF:4.000000,

0003.ts

#EXTINF:4.000000,

0004.ts

#EXTINF:0.120000,

0005.ts

#EXT-X-ENDLIST

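In this example, each #EXTINF tag gives the duration of the segment whose file name follows it: 4 seconds for every segment except the last, which lasts 0.12 seconds. #EXT-X-TARGETDURATION sets the maximum segment duration, #EXT-X-KEY states that the segments are encrypted with AES-128 using the key at aes.key, and #EXT-X-ENDLIST marks the playlist as complete.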
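To make the tag semantics concrete, here is a sketch of a media playlist parser that handles only the tags used in the example above (#EXT-X-TARGETDURATION, #EXT-X-MEDIA-SEQUENCE, #EXTINF, and #EXT-X-ENDLIST) and ignores the rest, including #EXT-X-KEY. `MediaPlaylist` and `parse_media_playlist` are hypothetical names; a production parser would follow the full HLS specification (RFC 8216).

```python
from dataclasses import dataclass, field


@dataclass
class MediaPlaylist:
    target_duration: int = 0
    media_sequence: int = 0
    segments: list[tuple[float, str]] = field(default_factory=list)  # (duration, URI)
    ended: bool = False  # True once #EXT-X-ENDLIST is seen

    @property
    def total_duration(self) -> float:
        return sum(duration for duration, _ in self.segments)


def parse_media_playlist(text: str) -> MediaPlaylist:
    pl = MediaPlaylist()
    pending_duration = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#EXT-X-TARGETDURATION:"):
            pl.target_duration = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            pl.media_sequence = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,[<title>]"; keep only the duration.
            pending_duration = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line == "#EXT-X-ENDLIST":
            pl.ended = True
        elif not line.startswith("#") and pending_duration is not None:
            # A URI line closes the segment opened by the preceding #EXTINF.
            pl.segments.append((pending_duration, line))
            pending_duration = None
    return pl
```

For the example manifest, the six #EXTINF durations add up to 5 × 4 + 0.12 = 20.12 seconds, which is the value `total_duration` computes.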

Segments can be MP4 or TS files. In both cases, the segment is a container that stores video, audio, and optionally subtitles. A single segment is typically around 8 seconds long; with LL-HLS, segments can be shorter (about 2-3 seconds) and are further divided into partial segments that the player can download before the whole segment is finished.

LL-HLS

In 2020, an updated specification called LL-HLS (Low-Latency HLS) was introduced. Its changes are not revolutionary, but they partially address the protocol’s biggest issue: high latency. By allowing smaller (shorter) “chunks”, LL-HLS can in theory reduce latency to about 2-8 seconds. LL-HLS is now part of the base HLS specification, and client devices can decide whether to use the low-latency features or stick with standard HLS.

Content Delivery Networks

One of the greatest advantages of the HLS protocol is how easily it works with Content Delivery Networks (CDNs) to scale video broadcasts quickly. Most CDN services rely on a very simple mechanism in which static files are replicated across a large number of servers by a dedicated service (such as Amazon CloudFront, Cloudflare, or Akamai). Since every component of an HLS stream is a static file (manifests and individual segments), replicating such a stream is relatively simple and cost-effective.

For example, our Storm Streaming Server application generates .m3u8 and .ts/.mp4 files, which are then transmitted to a selected CDN service. The drawback of this approach is drastically increased latency, but it is very cost-effective when a stream is meant for tens of thousands of viewers.
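Since every HLS artifact is a static file, serving a stream through a CDN mostly comes down to setting the right HTTP headers. Below is a sketch of one common policy, assuming that live playlists are rewritten with every new segment (so they may be cached only briefly) while published segments never change (so they can be cached for a long time). The `response_headers` function and both max-age values are hypothetical; the content types are the standard ones for .m3u8, .ts, and .mp4 files.

```python
from pathlib import PurePosixPath

# Hypothetical cache lifetimes in seconds; tune them for your CDN.
PLAYLIST_MAX_AGE = 1       # live playlists change with every new segment
SEGMENT_MAX_AGE = 86_400   # a published segment never changes

CONTENT_TYPES = {
    ".m3u8": "application/vnd.apple.mpegurl",
    ".ts": "video/mp2t",
    ".mp4": "video/mp4",
}


def response_headers(path: str, live: bool = True) -> dict[str, str]:
    """Return Content-Type and Cache-Control headers for an HLS file."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix not in CONTENT_TYPES:
        raise ValueError(f"not an HLS file: {path}")
    if suffix == ".m3u8" and live:
        # Short TTL so viewers see new segments quickly.
        cache = f"public, max-age={PLAYLIST_MAX_AGE}"
    else:
        # Segments, and playlists of finished (VOD) streams, are immutable.
        cache = f"public, max-age={SEGMENT_MAX_AGE}"
    return {"Content-Type": CONTENT_TYPES[suffix], "Cache-Control": cache}
```

The split reflects the structure of HLS itself: only the small manifest changes over time, so almost all traffic (the segments) can be served from CDN caches.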

Summary

HTTP Live Streaming (HLS), developed by Apple in 2009, quickly became a favored streaming protocol due to its ability to deliver content efficiently across various devices. HLS segments video into small chunks, allowing adaptive bitrate streaming that adjusts quality based on internet speed, enhancing viewer experience. The protocol’s reliance on HTTP ensures broad compatibility, enabling playback on nearly any device with internet access and simplifying scalability via Content Delivery Networks (CDNs).

However, HLS introduces an inherent latency of about 6-10 seconds. Low-Latency HLS (LL-HLS) reduces it, but not enough for real-time interaction or latency-sensitive broadcasts such as live sports. HLS remains controlled by Apple, and although its codec support has expanded, it is still less suited for ultra-low-latency applications or for sending streams directly to servers. Despite these limitations, HLS is widely used for its adaptability and ease of implementation, making it a solid choice for most streaming needs, though not for scenarios that demand near-instant delivery.

Storm Streaming Server & Cloud Support for HLS

Storm Streaming Server and Storm Streaming Cloud, together with Storm Player, do not use HLS because of its latency. However, they can be combined with third-party web video players such as JW Player, Flowplayer, or JSVideo to play streams over HLS. Both standard HLS and its newer variant, LL-HLS, are supported.